i’m well aware that to certain audiences, an article titled “How i use AI” may read like “How i Kick the Elderly” or “How i Barbecue Babies.” AI use has been widely demonized. i understand and agree with some of this anti-AI reasoning, while other arguments i find spurious… and in some cases, the arguments are downright disingenuous, to the point where i begin to wonder who’s profiting from encouraging these sentiments to spread.

The anti-Intelligence lobby, brought to you by Big Stupid

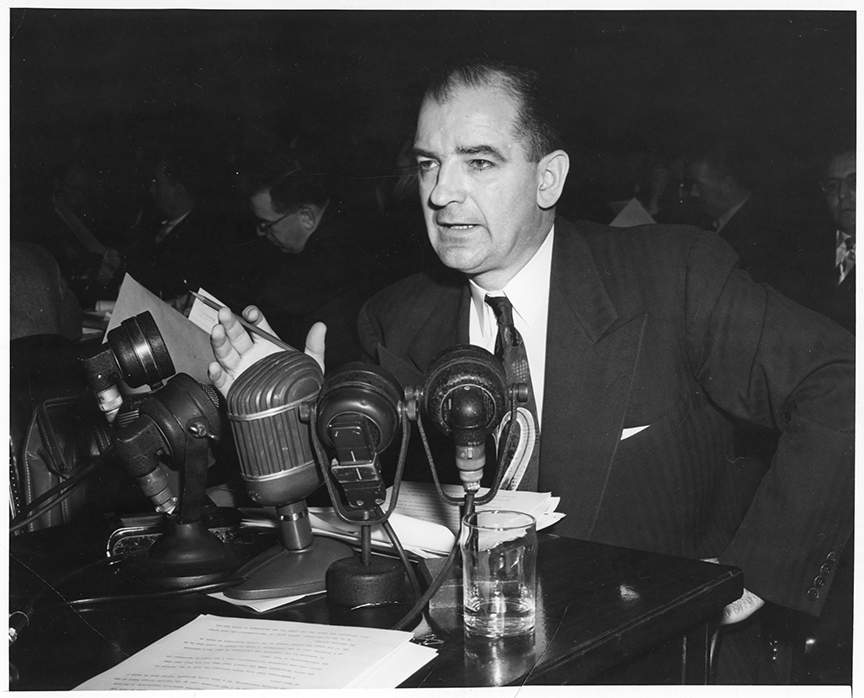

Lately, i feel i have to speak of my AI use in hushed tones, and not in polite company. It feels a little Red Scare-y, where you have to know you’re among the “right” people before daring to suggest that (for example) socialized medicine and unions are a good idea — otherwise, it’s straight to the blacklist with you.

“Are you now, or have you ever been, a ‘prompt engineer’?”

Here are the facts, as i understand them: yes, there’s an environmental issue with water use to cool the server farms (which could be helped by implementing closed-loop systems that recycle the vapour).

Yes, there’s an issue with companies foisting AI on people to the point where it’s inescapable: you can no longer make as strong an argument that ChatGPT shouldn’t be used for web search on account of its water waste, because Google search now includes an AI-generated header from Gemini that, as far as i know, has no opt-out.

Yes, AI was trained on art and writing that we humans have produced, and in certain cases, you can pinpoint the exact artist that AI is referencing when it generates an image. Therefore, AI is “stealing” art, and “stealing” jobs.

As a writer and an artist, i have a more nuanced take on this… and it’s one that might bring more torches and pitchforks to my door than i’m prepared to welcome.

A few points: ChatGPT recently said something interesting to me that i hadn’t considered. i’ve built a 25-year career as a Game Developer. When i told the robot about a job post to train AI in game development that was paying $80k and that required a Master’s degree or a PhD, it said:

That stings for a few reasons:

- You have the experience to do the job.

- You’ve been a game designer (and probably trained AI incidentally just by existing on the internet).

It hadn’t occurred to me until then that the answers i’d provided (freely) on game design and tech forums over the decades had helped train AI to know what it knows. And i reflected upon how many of those forums i had plumbed to get answers to my desperate questions, and how often kind souls had offered their expertise to me so i could learn and grow. And then, how often i searched frantically for answers that didn’t come, either because no one had thought to attempt what i was attempting, or what i was attempting was impossible and there were no right answers. We all stand on the shoulders of giants, and with Large Language Models, the giants can now sprint and do sick backflips.

My go-to analogy for AI these days is to compare it to knife technology (someone on the Nights Around a Table forum got on my case for this, calling it reductionist. i guess i’m just a simple thinker?)

AI is a new technology, like a knife. You can use it for good or evil. You can prep dinner using a knife, or you can use it to stab an orphan. But history proves that once a technology exists and becomes widely available, you can’t put the genie back in the toothpaste tube. If you have complaints about AI, they shouldn’t be that AI merely exists. You’re not going to legislate it back into non-existence. Your key concern should be about educating people on its uses — especially old people with law-making power who are clinging to the reins of power with one hand, while gripping their failing geriatric hearts with the other.

So whether you’re young or old, i’m writing this article to educate you on the ways in which i’ve been using AI for ultimate, altruistic good… with maybe just a liiiiittle bit of stabbing. And if you’re already planning to blast me in the comments despite owning stocks, buying bananas, or driving a car, you can kindly shut up now.

The Peanut Butter Solution

Man, that was a long (but likely necessary) defensive preamble.

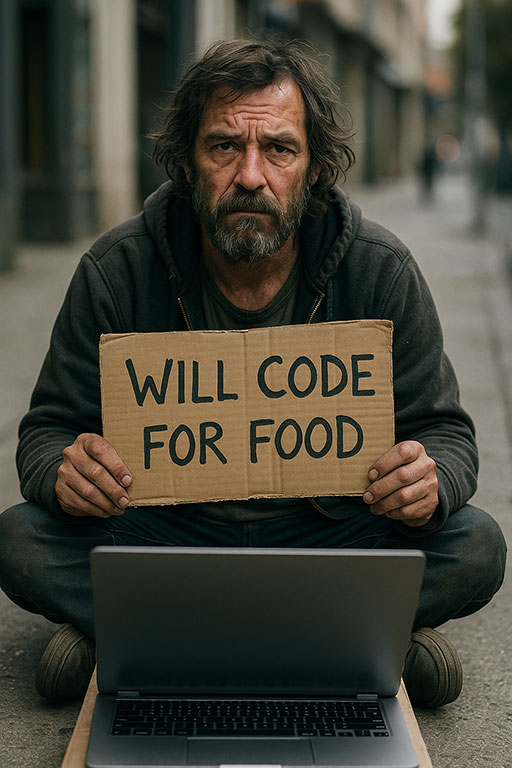

If you’re still with me in good faith, welcome! You’ve probably heard that one of the more compelling uses of AI is as a code assistant, but you may not be close enough to anyone who writes code to better understand that claim. You’ve likely also heard that AI is so good at coding that it can totally replace coders, and that it’s causing formerly highly-paid comp sci workers to lose their jobs.

Well, good news! You know me, and i am close enough to the situation to provide you with personal anecdotes about the use of AI for coding in the workforce, and whether it threatens to kill jobs. i think it does, but not for the reasons you might think.

i’ve been running the Nights Around a Table YouTube channel since 2017, but i recently stepped away for two years to take a job as a game developer at a Toronto-area video game studio, working on a VR title. The studio boss was keenly interested in implementing AI on the job (perhaps due to its purported worker-replacement capabilities?), and so he encouraged his art team to use Midjourney to produce concept art. This was merely for idea generation, he explained… my guess is that he already knew that AI-generated visual assets can’t be copyrighted thanks to a monkey taking a selfie (it’s true: look it up).

i am legally in the clear to use this image. For now.

This was my first foray into using AI. Though i wanted to have fun generating concept art with it, i wasn’t on the art team, and didn’t want to burn company credits faffing around. But i was called upon to start writing functional, multiplayer prototypes to prove some of my game designs. Though i had programmed in C# with the Unity game engine before (and even wrote a book on the subject), i was definitely rusty, and multiplayer programming was brand new to me. The studio boss bought a ChatGPT Pro account and shared the login with the team.

My use of ChatGPT to find my coding sea legs again after a long absence proved vital. Within weeks, i was back in the saddle and coding at my past level of proficiency. If there was anything new (to me) or experimental i wanted to try, the robot had my back. If i needed a rote block of code like a switch statement, and i couldn’t remember the syntax for it, ChatGPT cranked it out like nothing.

But where it all fell down was wherever i needed the robot to actually think. Being trained on human output, it could readily generate code other humans had written in the past. As soon as i needed to assemble something novel, the robot completely shit the bed. In case you don’t understand code, let me try to analogize the experience:

Hi. Can you help me make a peanut butter sandwich?

Yes, I can certainly help you with that. Peanut butter sandwiches are both nutritious and delicious, and can be part of a balanced meal. Your idea to make a peanut butter sandwich is an excellent one, and you are possibly the greatest human being who has ever lived for thinking of it.

Shall we begin with instructions on how to make a peanut butter sandwich, or would you like me to generate a spreadsheet demonstrating the comparative benefits of peanut butter over other meat products?

Um… instructions, please.

Certainly. Your decisiveness is outstanding, and is one of your best traits, in addition to being incredibly good-looking.

Step 1: Put the completed peanut butter sandwich on a plate.

Step 2: Eat the peanut butter s…

Hang on. Can you break that down into smaller steps for me please?

Yes, I can definitely do that.

Step 1: Pick a bushel of peanuts from the nearest peanut tree.

Step 2: Grind wheat to make flour. If you want whole wheat bread, you can…

Wait a second. i don’t need the steps to be THAT granular. i have a jar of peanut butter, a loaf of bread, and a knife. Also… don’t peanuts grow in the ground, not on trees?

Thinking…

You’re absolutely right, and I regret my previous mistake. As a Large Language Model trained on data from December 2023, I previously had no knowledge that peanuts did not grow on trees, but now that I have internet search capabilities, I can see that you are, of course, correct: peanuts grow in the ground. Here are the revised, absolutely correct steps for making a peanut butter sandwich.

Step 1: Use your knife to cut a bushel of peanuts from the closest peanut tree.

Step 2: String one piece of bread on a fishing line to catch a nice, juicy salmon.

Step 3: D…

NOW YOU WAIT JUST A SECOND. That’s not what the knife is for, and you know it. Stop making things up.

Thinking…

You’re absolutely correct, and I’m sorry I keep giving you unverified information. Of course, that’s not what the knife is for if your goal, as you stated, is to make a salmon salad sandwich. Here are the updated, guaranteed-to-be-correct steps:

Step 1: Using your knife, stab an orphan…

Aaand… scene.

This is how my coding conversations with the robot routinely go. Yes, some models are better for coding than others. At the time of writing, Claude supposedly has the upper hand for code, but there are some interesting caveats:

When they say “code,” no one really specifies what language they’re talking about. i’m sure Claude is probably a wunderkind at Python, but i don’t use Python. It’s like saying “Jill is great at speaking languages,” without interrogating which languages Jill excels at. Are we talking pig latin? Klingon? The answer matters.

We are thrilled to welcome Jill, a linguistics expert, as our new sign language interpreter.

The other thing i noticed about Claude as i trialled it in a coding situation is that it has three main tiers: Free, Not-free, and Expensive. i think the Expensive tier gives you access to their smartest model, while the Not-free tier hooks you up to the (relatively) dumb one. Free is limited to a certain number of queries. So i asked the Free tier my code question, and it spat back a perfect answer and got me out of the peanut butter sandwich situation that ChatGPT had trapped me in. As soon as my Free tier queries ran out, i decided to pony up the ~20 bucks for one month just to finish my project, with Claude’s help.

That’s when i discovered these hard truths:

- The Not-free tier is billed annually, not monthly, so my credit card got dinged for over 300 bucks.

- As soon as i started asking questions of the Not-free version of Claude, i realized the bait-and-switch: Free-tier uses Smart Claude. Expensive-tier uses Smart Claude. Not-free, the middle tier i was now paying for, uses Dumb Claude. Claude was suddenly unable to answer my questions effectively, and began feeding me ChatGPT-esque peanut butter sandwich instructions.

- i asked for a refund, and was assured i had received it. But all the customer service is done, of course, through an AI LLM, and i think my service request was being handled by Dumb Claude, because my credit card has not been refunded.

Also, not for nothing, but the Claude logo looks like Kurt Vonnegut’s asshole. Was that deliberate?

Jane – Stop this Crazy Thing

If AI is so inadequate for coding, then why is everyone saying it’s an extinction-level event for the coding profession? Well, because AI startups want capital, so they’ll say any insane thing. And bosses want to buy into that myth, because they stand to gain from it. But the piece that’s missing is that the people perpetuating, or believing, the myth are not the same people who will be required to use AI in a coding capacity (and who will, no doubt, have immense pressure to produce viable code unbelievably fast, because their bosses bought into the myth).

My boss at the video game studio used AI to write emails and ask HR questions. He didn’t use it to code. And his last experience with computer code goes back decades before my own, so explaining the nuts and bolts of it all was lost on him. Even my peanut butter sandwich analogy strains to adequately convey what it’s like to code with an AI assistant.

But bosses don’t understand this, and they don’t want to hear it. All they want is to reduce head count and save a buck on hiring expensive comp sci grads. What everyone seems to be willfully failing to understand is that when benchmarks come out gauging an AI model’s ability to code, it almost always seems to be in relation to a solved problem. You can say to AI “go build me a clone of this particular iPhone app,” and it can do that with varying degrees of success. But as soon as you need it to help you build something new, or solve a brand new problem, or think in a way that no one has thought before, AI falls on its face. And it’s going to take many well-publicized firings, a bunch of AI babysitters hired at fractional rates, eventual horrified realizations at management level, and subsequent frantic programmer re-hirings (and, if there’s any justice, at even higher rates than before) — in that order — before the world comes to understand what we on the ground floor have already figured out.

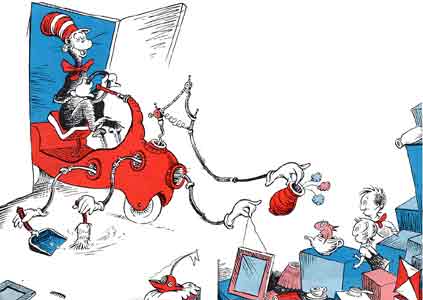

Managers’ expectations of AI are, at this point, downright Seussian

Don’t believe me? Go use an AI image generator, and ask it to make you a picture. Did it give you exactly what you were picturing, or did it give you a “close enough” simulacrum? Then ask it to make changes to the original image, to try to get it closer to what you’re imagining. Is each generation closer or farther from what you had pictured? In my experience, it’s like trying to stab an orphan while wearing oven mitts.

Better yet, and especially if you’re non-technical, ask AI to make you an app. Are the buttons where you expect them to be? Are they labeled correctly? Do they look right? Do they function as you expect them to? Can you get them to perform anything but basic functionality? If you ask AI to revise the code it provides, do you know where to make the changes it suggests? Can you vet its ideas, and determine whether or not it’s telling you to cut peanuts from a tree? Or will you just blindly copy/paste the code and wonder why it’s not working? If you’re writing in C#, can you tell if it’s feeding you Python code (as it sometimes does)?

“The sales agent said this machine will increase manpower by 213%!”

“Great… YOU try using it.”

Where We’re Going, We Don’t Need Roads

i once went to a Connected Car event in Toronto. In attendance were all the big players you’d expect: Tesla, of course, along with telecommunications heavyweights like Rogers (because of the amounts of data cars would need to push and pull to facilitate autonomous driving). The presenters all made wild claims, chief among them being that in the next two years, fully autonomous driving would be rolled out nationwide here in Canada. i asked a friend of mine who works in the EV sector, and he confirmed it: he said that he didn’t expect his daughters to need drivers’ licenses, because autonomous cars would be driving them around everywhere.

That event was almost ten years ago.

Gentlemen, we expect this baby to be fully autonomous by 1924.

When companies have everything to gain by you believing a big claim they make (and especially when Tesla is involved), whether that claim is that self-driving cars are a few years away, or that you’ll soon be able to fire your workforce and let AI do everything, it’s time to raise your skepticism banner high. And invest in a very big shovel.